Audio-Reactive Visualizations

TouchDesigner

Audio-reactive visualization in TouchDesigner involves creating visual elements that respond dynamically to audio input, resulting in a synchronized and immersive experience.
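This minimal plain-Python sketch illustrates the core idea. It is not TouchDesigner's operator API, and the function names and attack/release values are hypothetical. It measures the loudness of each block of audio samples, smooths that loudness so visuals rise quickly and fall back gently, and maps the result onto a visual parameter such as sphere scale.

```python
import math

def rms(frame):
    """Root-mean-square loudness of one block of audio samples (0.0 if empty)."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0

def envelope(frames, attack=0.5, release=0.1):
    """Smooth per-frame RMS: move quickly toward louder levels (attack)
    and slowly toward quieter ones (release). Coefficients are in 0..1."""
    level = 0.0
    out = []
    for frame in frames:
        target = rms(frame)
        coeff = attack if target > level else release
        level += coeff * (target - level)
        out.append(level)
    return out

def to_scale(level, base=1.0, gain=2.0):
    """Map a smoothed loudness level to a visual parameter, e.g. sphere scale."""
    return base + gain * level
```

The separate attack and release coefficients are a common design choice. Visuals snap to transients but decay smoothly instead of flickering.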

My Roles

Intent

Synchronize visual changes with audio elements to create a tightly coordinated audio-visual experience for the song “Shelter” by Porter Robinson.

Audio Visualization

Generative Visuals

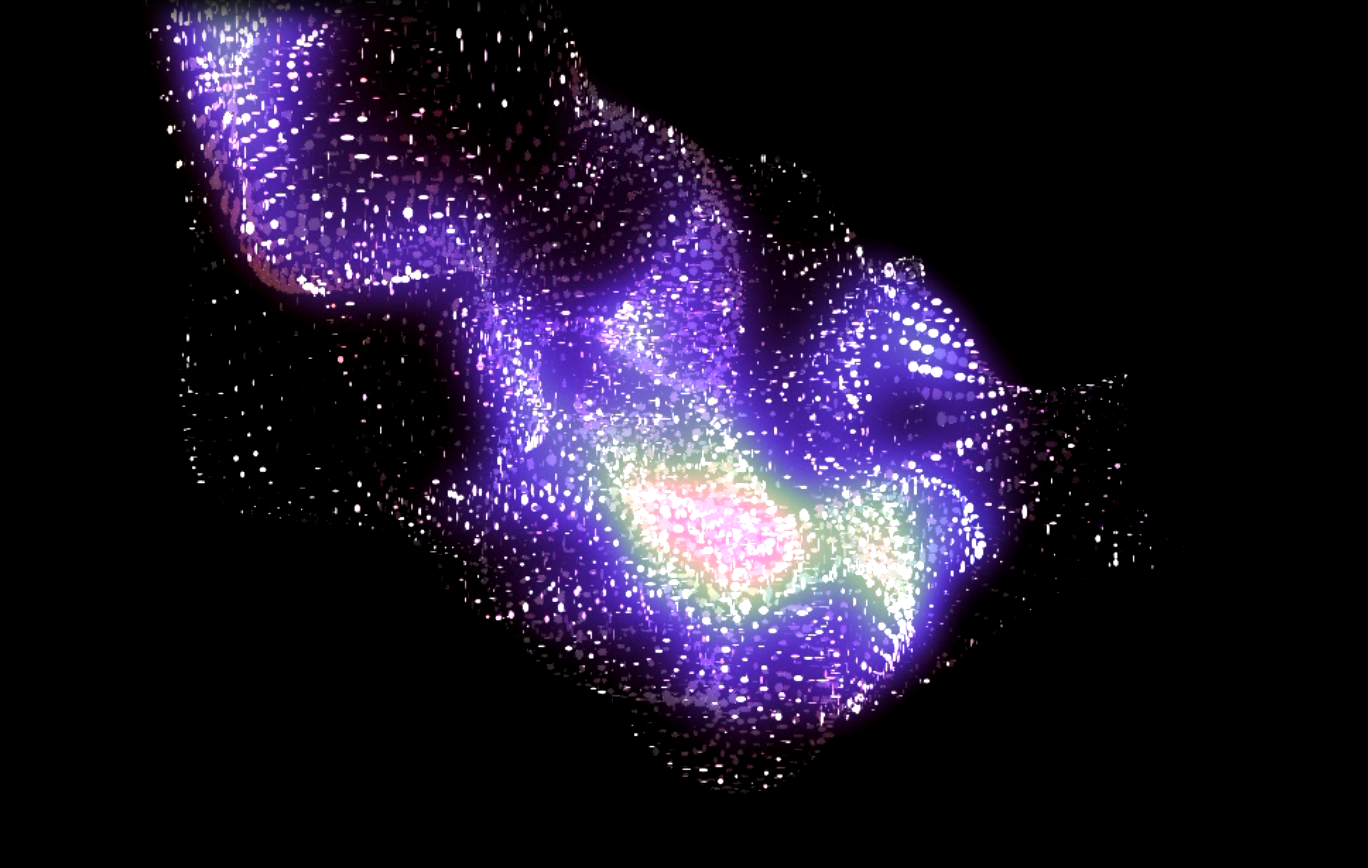

The foundational model is created through instancing: I mapped spheres onto the vertices of a plane, and this arrangement forms the base upon which the visual narrative unfolds. To make the model audio reactive, I sequenced the rate at which the vertices move to the beat of the music. I first ran the audio file through a rhythm detection CHOP in TouchDesigner, then linked the resulting rhythm data to an audio spectrum, isolating the distinct audio wave associated with the detected beat. Using both this audio wave and the audio spectrum map, I drove the movement of the instanced spheres, so that their shifts in color and motion follow the music and create a tight interplay between sound and visuals.
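The pipeline described above can be approximated outside TouchDesigner. It covers four stages: instancing on plane vertices, isolating a frequency band, detecting beats, and driving motion from them. What follows is a minimal plain-Python sketch, not TouchDesigner's operator API and not the project's actual network. Several stand-ins are involved:

- A naive DFT stands in for the audio spectrum.
- A simple energy-threshold detector stands in for the rhythm detection step.
- Every function and parameter name is hypothetical: `plane_vertices`, `band_energy`, `detect_beats`, `animate`, the 1.5× threshold, and the phase boost.

```python
import math

def plane_vertices(cols, rows, width=1.0, height=1.0):
    """Vertices of a cols x rows grid plane centred on the origin (z = 0);
    one sphere instance is placed at each vertex."""
    pts = []
    for j in range(rows):
        for i in range(cols):
            x = (i / (cols - 1) - 0.5) * width if cols > 1 else 0.0
            y = (j / (rows - 1) - 0.5) * height if rows > 1 else 0.0
            pts.append((x, y, 0.0))
    return pts

def band_energy(frame, sample_rate, lo_hz, hi_hz):
    """Mean normalised magnitude of the DFT bins between lo_hz and hi_hz.
    A naive O(n^2) DFT standing in for a spectrum operator."""
    n = len(frame)
    total, count = 0.0, 0
    for k in range(n // 2 + 1):
        if lo_hz <= k * sample_rate / n <= hi_hz:
            re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(frame))
            im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(frame))
            total += math.hypot(re, im) / n
            count += 1
    return total / count if count else 0.0

def detect_beats(energies, history=8, threshold=1.5, min_energy=0.01):
    """Flag a frame as a beat when its band energy exceeds `threshold` times
    the average of the previous `history` frames (and an absolute floor)."""
    beats = []
    for idx, e in enumerate(energies):
        past = energies[max(0, idx - history):idx]
        if not past:
            beats.append(False)  # no history yet to compare against
            continue
        avg = sum(past) / len(past)
        beats.append(e > max(threshold * avg, min_energy))
    return beats

def animate(vertices, energies, beats, base_rate=0.05, beat_boost=0.5, amp=0.2):
    """Per-frame instance positions: a radial wave whose phase advances faster
    on beats and whose height follows the band energy."""
    phase = 0.0
    frames = []
    for e, beat in zip(energies, beats):
        phase += base_rate + (beat_boost if beat else 0.0)
        frames.append([
            (x, y, amp * e * math.sin(phase - 4.0 * math.hypot(x, y)))
            for x, y, _ in vertices
        ])
    return frames
```

The detector compares each frame against a short moving average rather than a fixed threshold, so it adapts to louder and quieter sections of a song. The same band energy could also be mapped to hue to produce the color shifts.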